Experiment Evaluates The Effect Of Human Decisions On Climate Reconstructions

The first double-blind experiment analysing the role of human decision-making in climate reconstructions has found that it can lead to substantially different results.

The experiment, designed and run by researchers from the University of Cambridge, had multiple research groups from around the world use the same raw tree-ring data to reconstruct temperature changes over the past 2,000 years.

While each of the reconstructions clearly showed that recent warming due to anthropogenic climate change is unprecedented in the past two thousand years, there were notable differences in variance, amplitude and sensitivity, which can be attributed to decisions made by the researchers who built the individual reconstructions.

Professor Ulf Büntgen from the University of Cambridge, who led the research, said that the results are “important for transparency and truth – we believe in our data, and we’re being open about the decisions that any climate scientist has to make when building a reconstruction or model.”

To improve the reliability of climate reconstructions, the researchers suggest that teams make multiple reconstructions at once so that they can be seen as an ensemble. The results are reported in the journal Nature Communications.

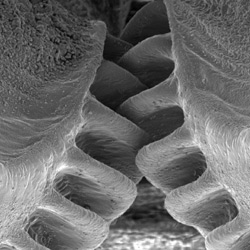

Information from tree rings is the main way that researchers reconstruct past climate conditions at annual resolution: as distinctive as a fingerprint, the rings formed in trees outside the tropics are annually precise growth layers. Each ring can tell us something about what conditions were like in a particular growing season, and by combining data from many trees of different ages, scientists are able to reconstruct past climate conditions going back hundreds and even thousands of years.

Reconstructions of past climate conditions are useful as they can place current climate conditions or future projections in the context of past natural variability. The challenge with a climate reconstruction is that – absent a time machine – there is no way to confirm it is correct.

“While the information contained in tree rings remains constant, humans are the variables: they may use different techniques or choose a different subset of data to build their reconstruction,” said Büntgen, who is based at Cambridge’s Department of Geography, and is also affiliated with the CzechGlobe Centre in Brno, Czech Republic. “With any reconstruction, there’s a question of uncertainty ranges: how certain you are about a certain result. A lot of work has gone into trying to quantify uncertainties in a statistical way, but what hasn’t been studied is the role of decision-making.

“It’s not the case that there is one single truth – every decision we make is subjective to a greater or lesser extent. Scientists aren’t robots, and we don’t want them to be, but it’s important to learn where the decisions are made and how they affect the outcome.”

Büntgen and his colleagues devised an experiment to test how decision-making affects climate reconstructions. They sent raw tree-ring data to 15 research groups around the world and asked them to use it to develop the best possible large-scale climate reconstruction for summer temperatures in the Northern Hemisphere over the past 2,000 years.

“Everything else was up to them – it may sound trivial, but this sort of experiment had never been done before,” said Büntgen.

Each of the groups came up with a different reconstruction, based on the decisions they made along the way: the data they chose or the techniques they used. For example, one group may have used instrumental target data from June, July and August, while another may have used only the mean of July and August.
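To make the target-season example concrete, here is a minimal sketch of how that one choice can change a reconstruction's amplitude. All numbers are invented for illustration, and the simple mean-and-variance scaling used here is an assumption, not the method of any group in the study; `seasonal_mean` and `scale_to_target` are hypothetical helper names.

```python
from statistics import mean, pstdev

# Hypothetical monthly temperature anomalies (°C) for five calibration years.
june = [0.1, -0.2, 0.3, 0.0, -0.1]
july = [0.4, -0.6, 0.8, 0.1, -0.5]
august = [0.3, -0.5, 0.6, 0.2, -0.4]

# Hypothetical tree-ring index for the same five years (dimensionless).
ring_index = [1.10, 0.85, 1.25, 1.00, 0.90]


def seasonal_mean(*months):
    """Average several monthly series year by year."""
    return [mean(values) for values in zip(*months)]


def scale_to_target(proxy, target):
    """Rescale the proxy so its mean and variance match the target series."""
    p_mean, p_sd = mean(proxy), pstdev(proxy)
    t_mean, t_sd = mean(target), pstdev(target)
    return [(p - p_mean) / p_sd * t_sd + t_mean for p in proxy]


# Decision point: which instrumental season is the calibration target?
jja = seasonal_mean(june, july, august)  # June-July-August mean
ja = seasonal_mean(july, august)         # July-August mean

recon_jja = scale_to_target(ring_index, jja)
recon_ja = scale_to_target(ring_index, ja)

# Same tree-ring data, different target, different amplitude.
amplitude_jja = max(recon_jja) - min(recon_jja)
amplitude_ja = max(recon_ja) - min(recon_ja)
print(f"JJA amplitude: {amplitude_jja:.2f} °C")
print(f"JA amplitude:  {amplitude_ja:.2f} °C")
```

In this toy case the two reconstructions rise and fall in the same years, yet the July-August version swings more widely because the invented July and August values vary more than June does.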

The main differences between the reconstructions were in amplitude: exactly how warm the Medieval warming period was, or how much cooler a particular summer was after a large volcanic eruption.

Büntgen stresses that each of the reconstructions showed the same overall trends: there were periods of warming in the 3rd century, as well as between the 10th and 12th centuries; they all showed abrupt summer cooling following clusters of large volcanic eruptions in the 6th, 15th and 19th centuries; and they all showed that the recent warming in the 20th and 21st centuries is unprecedented in the past 2,000 years.

“You think if you start with the same data, you will end up with the same result, but climate reconstruction doesn’t work like that,” said Büntgen. “All the reconstructions point in the same direction, and none of the results oppose one another, but there are differences, which must be attributed to decision-making.”

So, how will we know whether to trust a particular climate reconstruction in future? At a time when experts are routinely challenged, or dismissed entirely, how can we be sure of what is true? One answer may be to note each point where a decision is made, consider the various options, and produce multiple reconstructions. This would of course mean more work for climate scientists, but it could be a valuable check to acknowledge how decisions affect outcomes.

Another way to make climate reconstructions more robust is for groups to collaborate and view all their reconstructions together, as an ensemble. “In almost any scientific field, you can point to a single study or result that tells you what you want to hear,” said Büntgen. “But when you look at the body of scientific evidence, with all its nuances and uncertainties, you get a clearer overall picture.”
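As a rough illustration of what viewing reconstructions as an ensemble can mean in practice, the sketch below summarises several series by their per-year median and range. The group names and anomaly values are invented, and `ensemble_summary` is a hypothetical helper; the study itself may have summarised its ensemble differently.

```python
from statistics import median

# Hypothetical summer temperature anomalies (°C) from three groups
# for the same four years. None of these values come from the study.
reconstructions = {
    "group_a": [-0.30, 0.10, -0.80, 0.40],
    "group_b": [-0.20, 0.20, -0.50, 0.50],
    "group_c": [-0.40, 0.05, -1.10, 0.30],
}


def ensemble_summary(members):
    """Return per-year (median, minimum, maximum) across all members."""
    summary = []
    for values in zip(*members.values()):
        summary.append((median(values), min(values), max(values)))
    return summary


summary = ensemble_summary(reconstructions)
for year_index, (mid, low, high) in enumerate(summary):
    print(f"year {year_index}: median {mid:+.2f}, range {low:+.2f} to {high:+.2f}")
```

The median shows where the members agree, while the range makes visible how much of the amplitude depends on each group's choices.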

Reference:

Ulf Büntgen et al. ‘The influence of decision-making in tree ring-based climate reconstructions.’ Nature Communications (2021). DOI: 10.1038/s41467-021-23627-6

The text in this work is licensed under a Creative Commons Attribution 4.0 International License.